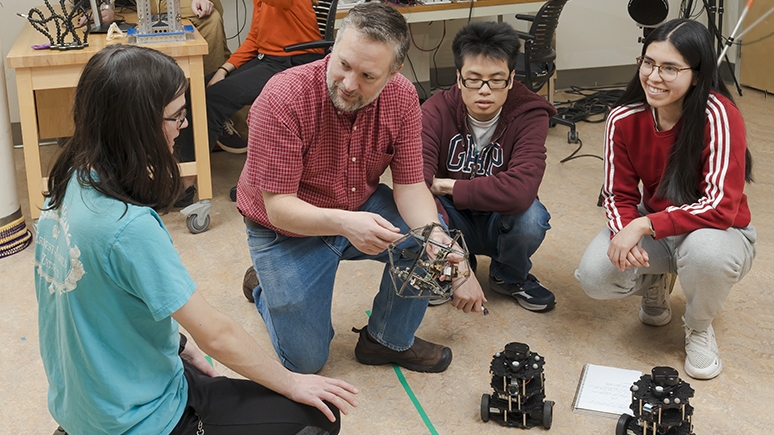

John Rieffel, professor of computer science and department chair, works with students on tensegrity robots in the CROCHET Lab. Rieffel's research uses AI to optimize movements of these “soft robots”—composed of rigid struts and springs—that have potential applications in search-and-rescue, space exploration and surgery.

Across Union’s campus—in classes, labs and independent research—students are learning about generative artificial intelligence.

Students and faculty are considering AI’s impact on society. What is AI exactly, and is it actually intelligent? When should we use it, and how might it help? When should we not, and what could go wrong?

Union’s commitment to interdisciplinary education makes it a great place to tackle these big questions. Faculty and students are drawing on connections across their areas of expertise to navigate these uncharted technological waters.

What is AI?

AI covers a huge swath of computer science and takes many forms.

Some researchers say that AI is all the things that we don’t know how to do with a computer, and once we do, they are no longer AI, said John Rieffel, professor of computer science. “One of the ironies is that throughout the history of AI, we have been moving the goalposts.”

For a long time, the pinnacle of AI was getting a computer to play tic-tac-toe, he added. Then came checkers. Then chess.

Things that we think should be easy for a computer are quite hard—like getting generative AI to produce plausible images or relatable language.

When an image is very close to lifelike, but not quite, people recognize it instantly, often as an unsettling feeling that roboticists have dubbed “the uncanny valley.”

“The more human-like something behaves, the freakier and more alarming the imperfections,” Rieffel said. “The human brain is very good at detecting imposters or when things don’t quite match.”

Andrew Burkett, professor of English and co-director of the Templeton Institute, asks students in his first-year inquiry course, “Humans & Nonhumans: Challenges from Artificial Intelligence and Biotechnology,” to compare their own writing to machine-generated texts analyzing course readings.

Students are often “bowled over” by ChatGPT’s inaccuracies and incomplete arguments compared to their own writing.

“At the same time, we stop to think about the ways that we might use ChatGPT as a starting point to generate a few outlines,” said Burkett, who also acknowledges that “ChatGPT has an excellent ability to summarize brief bursts of information from massive web searches.”

Is AI actually intelligent?

Defining AI has been a subject of debate since the term was first introduced by mathematicians in the 1950s.

“Some say that with AI we are talking about something that is truly intelligent in a human way,” said Kristina Striegnitz, associate professor of computer science. “But we don’t have tools to measure that.”

“Some have used AI more practically as a research area and AI is a label,” she continued. “But most people, including those who work on it, would say AI is not actually intelligent.”

Is there a sense that every new generation of AI is more “intelligent” than the last?

“A lot of research now goes into explainable AI. A large language model is a black box. It’s hard for humans to explain how the output was produced. For critical applications, like healthcare, it would be good to have an explanation as to how the solution came about.”

How should we use AI?

Responsibly. And with our eyes wide open.

“The tech itself isn’t good or bad—it’s a tool. It’s humans who are capable of evil. Like a 3D printer in the hands of someone who wants to print a ghost gun, or generative AI in the hands of someone who wants to steal money by impersonating someone,” said Rieffel.

“We want students to understand that technology has consequences,” he added. “When you’re developing a new technology, you need to think about what’s the worst thing that can happen. What can go wrong?”

Rieffel teaches a class on cyborgs with professors Jen Mitchell of English and Kate Feller of biology. Students consider how data from personal devices is retained by the vendor and sometimes shared publicly, with real-world consequences. Data from a period-tracking app could be used to prosecute women who have had an abortion and live in states that have criminalized the procedure. Apps like Strava that track and share a runner’s activity have been used by the Taliban to locate U.S. military installations in Afghanistan.

Other problems with AI may come from the data used to train it.

The 2020 documentary Coded Bias begins with the discovery by an MIT researcher that facial recognition technology does not accurately detect dark-skinned faces. It goes on to investigate the discrimination against minorities and women that AI brings to a number of areas, including education, healthcare, housing and employment.

“You don’t have to spend much time on the internet to realize there is a lot of sexism and racism, and AI is trained from the internet,” Rieffel said.

Nick Webb, an associate professor of computer science who specializes in computational linguistics, argues that there is no such thing as unbiased data. For example, economic data gathered in the 1980s accounted for only two genders.

“If you are not aware of the inclusion or omission of data, then the analysis becomes suspect,” he said. “Where did the data come from? How well do I understand that it contains all the information that I need? What are the biases? If I don’t understand that, then I can’t draw meaningful and useful conclusions.”

“The appeal of teaching machine learning—the basis of AI—at a liberal arts college is the ability to ask these questions to reflect on humanity and how we can and should be using these tools,” Webb continued. “Or more importantly, how we should not be using these tools.”

Rieffel echoed these sentiments.

“The value of learning about artificial intelligence at a liberal arts college is that you can better contextualize the implications of technology,” he said. “You can take classes in computer science, philosophy, economics or English and take a step back to understand all the implications. Being a good citizen of the world is understanding the context in which technology exists.”

Burkett agrees.

“I want Union graduates to be able to evaluate AI critically through the lens of their fine-tuned liberal arts education, but I also want to help them understand some basic ways to use such tools to save time and work in their lives,” he said. “We all need to find some middle path here between the hype and the hysteria around AI. Historically speaking, like all new media and other technologies, AI presents both risks and affordances.”